An Introduction to the Leaders in BI Tools According to Gartner’s Analysis

- May 13, 2017

- Posted by: Nick Revych

- Category: Business Intelligence, Information Management

Gartner, Inc. (NYSE: IT) is the world’s leading information technology research and advisory company. Gartner works with clients to research, analyze and interpret the business of IT within the context of their individual roles. Most business users and analysts in organizations will have access to self-service tools to prepare data for analysis as part of the shift to deploying modern BI platforms.

Gartner identified the leaders among BI tools and compared them across four categories: infrastructure, data management, analysis and content creation, and sharing of findings.

Nick Revych explains Gartner’s analysis of the four product leaders in the BI sector over the last three years: QlikView, Tableau, Microsoft Power BI and SAP Lumira 2.x. He describes the strengths and weaknesses of these products and outlines best practices, or rather, the errors to avoid when developing business analysis in these applications, based on the Model–View–Controller (MVC) software architectural pattern.

Key Concepts

By reading this article, you will learn:

- What criteria Gartner uses in its analysis.

- The strengths and weaknesses of the leading BI products.

- What the MVC software architectural pattern is and why it’s important for business analysis.

- What errors should be avoided when developing analytical dashboards.

- A universal data model for building any analytical panel.

Introduction

This article is useful for those who want to commission or develop analytical panels and are faced with choosing a development tool.

Second, you may ask how to prepare the data model correctly in order to obtain the desired analytics quickly, accurately and clearly.

Drawing on twenty years of working with various reports, I will share a universal recipe for developing and using business analysis.

But let’s take things in order…

Gartner analysis for the last three years (2015, 2016, 2017)

Gartner’s analysis uses 15 critical capabilities of BI and analytics platforms:

Infrastructure

- BI Platform Administration. Capabilities that enable scaling the platform, optimizing performance and ensuring high availability and disaster recovery.

- Cloud BI. Platform-as-a-service and analytic-application-as-a-service capabilities for building, deploying and managing analytics and analytic applications in the cloud, based on data both in the cloud and on-premises.

- Data Source Connectivity and Ingestion. Capabilities that allow users to connect to the structured and unstructured data contained within various types of storage platforms, both on-premises and in the cloud.

Data Management

- Metadata Management. Tools for enabling users to share the same systems-of-record semantic model and metadata. These should provide a robust and centralized way for administrators to search, capture, store, reuse and publish metadata objects, such as dimensions, hierarchies, measures, performance metrics/key performance indicators (KPIs) and report layout objects, parameters and so on. Administrators should have the ability to promote a business-user-defined data model to a system-of-record metadata object.

- Self-Contained Extraction, Transformation and Loading (ETL) and Data Storage. Platform capabilities for accessing, integrating, transforming and loading data into a self-contained storage layer, with the ability to index data and manage data loads and refresh scheduling.

- Self-Service Data Preparation. The drag-and-drop, user-driven data combination of different sources, and the creation of analytic models such as user-defined measures, sets, groups and hierarchies. Advanced capabilities include semantic autodiscovery, intelligent joins, intelligent profiling, hierarchy generation, data lineage and data blending on varied data sources, including multistructured data.

Analysis and Content Creation

- Embedded Advanced Analytics. Enables users to easily access advanced analytics capabilities that are self-contained within the platform itself or available through the import and integration of externally developed models.

- Analytic Dashboards. The ability to create highly interactive dashboards and content, with visual exploration and embedded advanced and geospatial analytics, to be consumed by others.

- Interactive Visual Exploration. Enables the exploration of data via the manipulation of chart images, with the color, brightness, size, shape and motion of visual objects representing aspects of the dataset being analyzed. This includes an array of visualization options that go beyond those of pie, bar and line charts, to include heat and tree maps, geographic maps, scatter plots and other special-purpose visuals. These tools enable users to analyze the data by interacting directly with a visual representation of it.

- Smart Data Discovery. Automatically finds, visualizes and narrates important findings, such as correlations, exceptions, clusters, links and predictions in data, that are relevant to users without requiring them to build models or write algorithms. Users explore data via visualizations, natural-language-generated narration, search and natural-language query (NLQ) technologies.

- Mobile Exploration and Authoring. Enables organizations to develop and deliver content to mobile devices in a publishing and/or interactive mode, and takes advantage of mobile devices’ native capabilities, such as touchscreen, camera, location awareness and natural-language query.

Sharing of Findings

- Embedding Analytic Content. Capabilities including a software developer’s kit with APIs and support for open standards for creating and modifying analytic content, visualizations and applications, embedding them into a business process, and/or an application or portal. These capabilities can reside outside the application (reusing the analytic infrastructure), but must be easily and seamlessly accessible from inside the application without forcing users to switch between systems. The capabilities for integrating BI and analytics with the application architecture will enable users to choose where in the business process the analytics should be embedded.

- Publish, Share and Collaborate on Analytic Content. Capabilities that allow users to publish, deploy and operationalize analytic content through various output types and distribution methods, with support for content search, storytelling, scheduling and alerts.

- Platform Capabilities and Workflow. This capability considers the degree to which capabilities are offered in a single, seamless product or across multiple products with little integration.

- Ease of Use and Visual Appeal. Ease of use in administering and deploying the platform, creating content, and consuming and interacting with content, as well as visual appeal.

The key takeaway from this information is that the best products on the above criteria are:

QlikView, Tableau, Microsoft Power BI and SAP Lumira 2.x.

According to the experts, all these products meet the specified criteria.

Microsoft

Microsoft offers a broad range of BI and analytics capabilities, both on-premises and in the Microsoft Azure cloud. Microsoft Power BI is the focus for this Magic Quadrant and is on its second major release, offering cloud-based BI with a new desktop interface, Power BI Desktop. Microsoft SQL Server Reporting Services and Analysis Services are not included in the Magic Quadrant evaluation, but are covered in Gartner’s new Market Guide for enterprise reporting-based platforms. Power BI offers data preparation, data discovery and interactive dashboards via a single design tool. Microsoft continues to support the original Excel-based add-ins that made up the first Power BI release: Power Query, Power Pivot, Power View and Power Map. The Excel-based add-ins are positioned primarily for customers who need an on-premises deployment (and became native in Office 2016). Power BI 2.x offers both desktop-based and browser-based authoring, with applications shared in the cloud. New in this release is hybrid connectivity to on-premises data sources, meaning that not all data must first be pushed and loaded into the Microsoft Azure cloud. Microsoft has substantially lowered the price of Power BI, from its original $39.95 per user per month to $9.95 per user per month, making it one of the lowest-priced solutions on the market today, particularly among larger vendors. The lower price point, in addition to substantial product improvements, explains the strong uptake by 90,000 organizations (according to Microsoft). Microsoft is positioned in the Leaders quadrant, with strong uptake of the latest release, major product improvements, an increase in sales and marketing awareness efforts, new leadership and a clearer, more visionary product roadmap.
Microsoft’s vision to provide natural-language query and generation via its Cortana personal digital assistant, together with its strong partner network and its strategy to provide prebuilt solutions, positions it furthest to the right on the Completeness of Vision axis.

STRENGTHS

Microsoft’s cloud-based delivery model and low per-user pricing offer a low TCO, one of the top three reasons why customers selected it, in addition to ease of use for business users and the availability of skilled resources. While Microsoft has long offered low per-user pricing, customers are advised to consider the TCO, which includes hardware, development and support costs. Previously, Microsoft had a high cost of ownership in its on-premises deployment model (despite low licensing costs) because of the complexity of implementing multiple servers. The new Power BI addresses this issue through a streamlined workflow for content authors and by hosting the hardware and server architecture in the Microsoft Azure cloud. Microsoft ranks in the top quartile for achievement of business benefits, with high scores for monetizing data, improving customer service and increasing revenue, as well as delivering better insights to more users. As customers move to business-user-led deployments, an emphasis on achieving business benefits at a lower cost has driven much of the net new BI and analytics buying, in lieu of centrally provisioned, IT-authored reporting platforms. Microsoft was ranked in the top quartile of Magic Quadrant vendors for user enablement (only Tableau ranked slightly higher), with high scores for online tutorials, community support, conferences and documentation. The high enablement scores also contributed to Microsoft’s ranking in the top quartile for product success. Microsoft has continued to expand the number and variety of data sources it supports natively and has also improved its partner network to build out connectors and content, including prebuilt reports and dashboards. For example, Microsoft now has prebuilt connectors (and content) for Facebook, Salesforce, Dynamics CRM, Google Analytics, Zendesk and Marketo, to name a few.

CAUTIONS

Microsoft Power BI 2.x was released in July 2015. The newness of the product and its cloud-only delivery model may contribute to Microsoft’s ranking in the bottom third for deployment size, with an average of 192 users. Eleven percent of surveyed customers cited the inability to support a large number of users as a limitation to broader deployment. (Note that Power BI 1.0 customers, whose deployments may be larger because of both product maturity and on-premises deployment, were not included in the survey.) Microsoft has published a statement of direction, intending to harmonize its on-premises and cloud products, but the strategy is unclear. Using the current Excel-based add-ins and publishing to on-premises SharePoint is one option. Alternatively, customers may author in Power BI Desktop and then publish to an on-premises partner product such as Pyramid Analytics or Panorama Necto. Microsoft scores low on product capabilities for advanced analytics within Power BI; even simple forecasting must be done externally in Excel. The vendor’s newly introduced Cortana Analytics Suite, which brings together key modules including Power BI, Azure Machine Learning, Cortana Personal Digital Assistant and Business Scenarios, may partly address this limitation. Also, following its acquisition of Revolution Analytics, Microsoft now includes a preinstalled local R instance with Power BI Desktop.

Microsoft was rated in the bottom quartile for breadth of use, which looks at the percentage of users that use the product for a range of BI styles, from viewing reports, creating personalized dashboards and doing simple ad hoc analysis to performing complex queries, data preparation and using predictive models. Microsoft Power BI is mainly being used for parameterized reports and dashboards, but this limited breadth of use may improve as deployments mature. Microsoft was ranked in the bottom quartile for sales experience by survey references. This can be partly attributed to its frequent changes in pricing and packaging, as well as the lack of a BI and analytics-focused sales force. For example, Office 365 is no longer a prerequisite; Power BI can be purchased as a separate SKU, or via Cortana Analytics Suite or Office 365 Enterprise E5.

Qlik

Qlik offers governed data discovery and analytics via its two primary products: QlikView and Qlik Sense. Its in-memory engine and associative analytics allow users to see patterns in data in ways not readily achievable with straight SQL. Both QlikView and Qlik Sense are often deployed by lines of business as well as by centralized BI teams that are building applications for governed data discovery. Qlik Sense was officially released in September 2014, based on modern APIs and an improved interface, and became the vendor’s lead product for new customers in 2015. Qlik Sense Cloud and Qlik Data Market were also released in 2015. Qlik Analytics Platform (QAP) is a solution for developers to build and embed content using the same redesigned engine and Web services APIs upon which the vendor built Qlik Sense. QlikView and Qlik Sense customer experience scores were considered in this evaluation, but Qlik Sense was the primary focus for our product evaluation. Qlik is positioned in the Leaders quadrant, driven by a robust product and high customer experience scores (based on an assessment of Qlik Sense). Its market execution has been tempered by confusion in the marketplace around QlikView and Qlik Sense. This should improve in 2016, with a stronger product, changes in executive leadership and clearer messaging, although its strong partner network may hinder execution of the new positioning of Qlik Sense as the vendor’s lead product. The key components of Qlik’s overall vision — a marketplace, governed data discovery with users able to readily promote content, and increasingly smart data preparation — position it as one of the most complete solutions.

STRENGTHS

Qlik is highly rated for ease of use, complexity of analysis and business benefits (according to its reference customers). Compared with its chief competitors, Tableau and Microsoft, Qlik scores significantly higher on complexity of analysis — which we attribute to its stronger ability to support multiple data sources, a robust calculation engine and associative filtering and search. With a modern BI architecture, power users may become the predominant content developers, instead of IT developers. In this regard, user enablement is more important as users need just-in-time training, online tutorials and community-based resources to support them. Qlik scored in the top quartile (of this Magic Quadrant’s vendors) for user enablement. This score should improve further in 2016, because Qlik recently introduced its Qlik Continuous Classroom. With a rapid implementation approach and an in-memory engine that can handle complex data sources and applications, Qlik scored in the top third for product success. In this regard, Qlik can be used as an extension to a data warehouse or as a data mart for customers that lack a data warehouse. This vendor has continued to introduce smarts into the product to simplify the data load and modeling process. Customers most often choose Qlik for its ease of use, functionality and performance. Qlik’s strong partner network (of more than 1,700) across multiple geographies is a key ingredient in ensuring customer success, which improved in 2015. Product success also improved significantly this year, which can most likely be attributed to a more mature product and improved partner enablement.

CAUTIONS

Cost of software was cited as a barrier to adoption by 29% of Qlik’s reference customers, putting it in the top quartile for this barrier. Qlik Sense uses token-based pricing, which closely aligns to a named user but with some concurrency supported. The degree to which Qlik is considered expensive depends on the point of comparison. Based on user reference responses from last year’s Magic Quadrant, Qlik’s licensing is competitively priced relative to Tableau and is less costly than that of the megavendors. However, in larger deployments (of more than 500 users), its pricing is 70% higher than that of chief competitor Tableau and almost double Microsoft’s three-year license fee. Recent contract reviews by Gartner do show increased flexibility in negotiating terms for larger deployments. Qlik scored slightly below average for customer support (which includes level of expertise, response time and time to resolve), although there has been a slight improvement over last year’s support scores. Qlik also recently introduced Proactive Support, in which it transparently collects data from customer log files to proactively look for performance issues or events that may impact the server. Twenty-three percent of Qlik’s reference customers cited absent or weak functionality as a platform problem, indicating that Qlik Sense still has some functionality gaps to address, most notably in mobile, advanced analytics, scheduling and collaboration. Qlik has been slow to enter the cloud market directly, relying on its partners for cloud deployments. While Qlik Sense Cloud was introduced in 2015, the current version only provides limited application sharing and authoring for free. A fee-based version, Qlik Sense Cloud Plus, was recently introduced with up to 10GB of storage per user. Qlik Sense Enterprise Cloud, with greater administrative control over provisioning users and storage, will be released in stages (beginning in 2016).

Tableau

Tableau offers highly interactive and intuitive data discovery products that enable business users to easily access, prepare and analyze their data without the need for coding. Since its inception, Tableau has been sharply focused on enhancing the analytic workflow experience for users — with ease of use being the primary goal of much of its product development efforts. Tableau’s philosophy has been proven to appeal to business buyers and has served as the foundation for the “land-and-expand” strategy that has fueled much of its impressive growth and market disruption. Tableau is one of three vendors positioned in the Leaders quadrant this year. Despite increased pressure in 2015 from a growing number of competitors, Tableau has continued to execute and expand in organizations and win net new business to maintain its growth rate. Tableau’s efforts to build product awareness and win mind share globally have contributed to its Completeness of Vision, in addition to an increased focus on smart data preparation and smart data discovery capabilities on the product roadmap.

STRENGTHS

Tableau continues to execute better than any vendor in the BI market, and its land-and-expand sales model has performed extremely well, resulting in a dramatic increase in large enterprise deals, many of which started out as small desktop deployments that grew organically over time within organizations. Tableau has the third-largest average deployment size of all the vendors included in this Magic Quadrant, at 1,927 users, driven by 42% of organizations reporting average deployments of more than 1,000 users (which probably reflects Tableau’s approach of leveraging an underlying data warehouse if one exists). A core strength of Tableau is its versatility, both in terms of deployment options across cloud and on-premises and in the use cases it can address. According to the reference survey, there are as many deployments of Tableau supporting centralized BI provisioning as there are for decentralized analytics. Some organizations prefer to use Tableau to empower centralized teams to provision content for consumers in an agile and iterative manner, while others take more of a hands-off approach and enable completely decentralized analysis by business users. In line with best practices for striking a balance between the stability and consistency that come with centralization and the agility offered by decentralization, Tableau continues to promote its Drive methodology, which probably contributed to the high percentage of governed data discovery use cases cited by its survey references. Tableau’s focus on making its customers successful is evident in its top overall rating for customer enablement. Tableau offers a vast array of learning options, including online tutorials, webinars and hands-on classroom-based training, to educate and empower its users, which has increased the number of skilled Tableau resources available in the market.
Attendance at Tableau’s user conference topped 10,000 attendees in 2015, nearly double the 2014 attendance and an increase of more than 50 times the 187 attendees at its inaugural user conference in 2008. In addition to directly enabling its customers, Tableau has built an extensive network of Alliance Partners with expertise in its implementations. Tableau’s core product strengths continue to be its diverse range of data source connectivity, which is constantly expanding, as well as its interactive visualization and exploration capabilities. This combination delivers on Tableau’s mission of helping people see and understand their data by enabling rapid access to virtually any data source, which nontechnical users can immediately begin interacting with — through an intuitive visual interface — to iteratively ask and answer questions and discover new insights.

CAUTIONS

While expansion continues to be strong for Tableau, pricing and packaging are being more heavily scrutinized because larger deals typically involve IT and/or procurement. When asked about limitations to wider deployment, 44% of Tableau’s survey references cited the cost of software as a barrier. With increased price sensitivity in this market, new lower-priced market entrants, coupled with Tableau’s reluctance to respond with a more attractive enterprise pricing model, have probably affected its sales execution survey rating this year and contributed to the drop in its position on the Ability to Execute axis compared with last year (when Tableau dramatically outperformed the competition). Reference survey input suggests that Tableau is experiencing the growing pains that often accompany rapid growth, as vendors struggle to scale to meet support demands for more complex deployments (as indicated by Tableau’s overall support score from its client references, which was below the vendor average for this Magic Quadrant). The reference survey also suggests that buyers of Tableau have encountered some software limitations as they attempt to scale their deployments (to meet the demands of more users trying to solve more complex problems) and to govern those deployments (as they continue to expand within customer organizations). Tableau’s client references ranked it in the bottom third of all Magic Quadrant vendors for complexity of analysis. As customers reach the limits of Tableau’s current capabilities, this may dampen their enthusiasm.

Despite efforts to improve its data preparation capabilities in version 9, Tableau still has weaknesses in data integration across data sources. Tableau supports a diverse range of data connectivity options, spanning relational, online analytical processing (OLAP), Hadoop, NoSQL and cloud sources, but offers little support for integrating combinations of these sources in preparation for analysis. To compensate for this weakness, a growing number of Tableau customers have turned to vendors specializing in self-service data preparation that can output to Tableau’s native Tableau data extract (TDE) format. This is a concern for Tableau, because it requires its solution to be deployed with another tool, which magnifies the TCO concerns that already exist within its customer base. Of greater concern is that shifting data preparation to a separate product could marginalize Tableau as the front-end visualization space becomes increasingly commoditized and more difficult to differentiate.

SAP Lumira 2.x

SAP Lumira, the data visualization product among SAP BusinessObjects’ modern BI tools, has in the past followed a quarterly (and sometimes even more frequent) release schedule as the vendor tried to compete with Tableau, Qlik and other more established market players. SAP will now halt Lumira releases until summer 2017, when it plans to release Lumira 2.0, the successor not only to the current Lumira 1.31 but also to SAP Design Studio 1.6. SAP intends to merge Lumira and Design Studio into a single product with two different sets of functionality.

STRENGTHS

The sophisticated user that Stocker (who presented alongside SAP product manager Ramu Gowda) refers to is the Design Studio user. Combining the two products is part of an ongoing BI convergence strategy for SAP, which began with SAP Predictive Analytics 2.0 in 2015, when the former KXEN Infinite Insight product was merged with SAP Predictive Analytics.

Similar to the way that Predictive Analytics 2.0 features two segments (the former Infinite Insight side with its automated algorithms, and the “expert” side, which has a more traditional code-based approach to statistical analysis), Lumira 2.0 will feature “Discovery” functionality reflecting current versions of Lumira and “Design” functionality, which will maintain the capabilities of Design Studio.

“Lumira 2.0 encompasses two different use cases: one is the business user who wants an easy user experience to start playing with the data. The other is the professional designer who wants more flexibility with custom actions.”

One feature of the product merger that Stocker highlights is bringing the online capability of Design Studio, along with its ability to connect to HANA, together with the offline capability of Lumira and its ability to connect to other data sources, including BusinessObjects Universes.

“With Lumira 2.0 you can take simple analysis done by a business user and turn it into a corporate dashboard, operationalize it,” says Ramu Gowda, product manager at SAP, who presented alongside Stocker.

CAUTIONS

Support continues to be a serious concern for SAP’s clients: SAP received among the lowest scores in the survey for support-related ratings and the lowest overall support ranking of all the Magic Quadrant vendors.

You can choose any product from the group of leaders and will be satisfied if you prepare a good data model. Several recipes for successful product implementation are described below.

BEST PRACTICES FOR IMPLEMENTING BI TOOLS

You can choose any product from the Leaders quadrant, but this does not guarantee that your developers will build high-quality reports that you can comfortably use to make important operational decisions. Below are a few important points to watch in order to get the desired result and a reliable analytical reporting system.

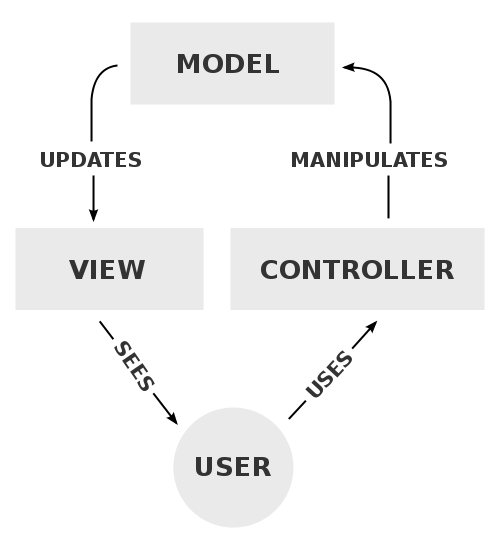

Use the MVC pattern if you want a good business intelligence system.

Divide your system into three parts and control each one to get the best result.

Model–View–Controller (MVC) is a software architectural pattern for implementing user interfaces on computers. It divides a given application into three interconnected parts in order to separate internal representations of information from the ways that information is presented to and accepted from the user. The MVC design pattern decouples these major components, allowing for efficient code reuse and parallel development.

Traditionally used for desktop graphical user interfaces (GUIs), this architecture has become popular for designing web applications and even mobile, desktop and other clients.

In conversational form:

View: “Hey, controller, the user just told me he wants item 4 deleted.”

Controller: “Hmm, having checked his credentials, he is allowed to do that… Hey, model, I want you to get item 4 and do whatever you do to delete it.”

Model: “Item 4… got it. It’s deleted. Back to you, Controller.”

Controller: “Here, I’ll collect the new set of data. Back to you, view.”

View: “Cool, I’ll show the new set to the user now.”
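The conversation above maps directly onto three small classes. The following Python sketch is illustrative only: the class names, the `allowed_users` permission check and the sample items are hypothetical, and no BI tool exposes this exact API.

```python
class Model:
    """Holds the data; knows nothing about how it is displayed."""

    def __init__(self, items):
        self._items = dict(items)  # item id -> item name

    def delete(self, item_id):
        # "Item 4... got it. It's deleted."
        del self._items[item_id]

    def all_items(self):
        return dict(self._items)


class View:
    """Renders data and forwards user requests to the controller."""

    def __init__(self):
        self.controller = None
        self.rendered = None

    def user_requests_delete(self, user, item_id):
        # "Hey, controller, the user just told me he wants item 4 deleted."
        self.controller.handle_delete(user, item_id)

    def show(self, items):
        # "Cool, I'll show the new set to the user now."
        self.rendered = sorted(items.items())


class Controller:
    """Mediates between view and model, checking permissions first."""

    def __init__(self, model, view, allowed_users):
        self.model = model
        self.view = view
        self.allowed_users = set(allowed_users)
        view.controller = self

    def handle_delete(self, user, item_id):
        # "Having checked his credentials, he is allowed to do that..."
        if user not in self.allowed_users:
            raise PermissionError(f"{user} may not delete items")
        self.model.delete(item_id)
        # "Here, I'll collect the new set of data. Back to you, view."
        self.view.show(self.model.all_items())


model = Model({1: "North", 2: "South", 3: "East", 4: "West"})
view = View()
controller = Controller(model, view, allowed_users={"alice"})
view.user_requests_delete("alice", 4)
print(view.rendered)  # [(1, 'North'), (2, 'South'), (3, 'East')]
```

The view never touches the model directly, so the display could be swapped for another one without changing the data logic.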

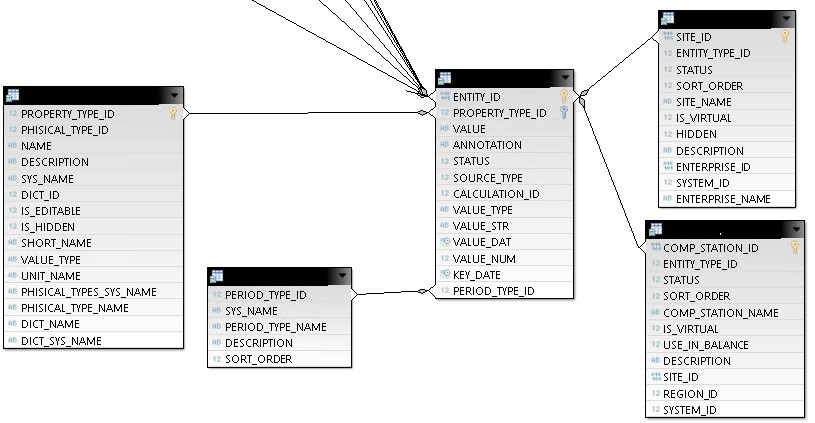

MODEL – the CENTRAL and most important part of your analytical application.

In fact, there is a universal model for storing any business information.

The optimal option for business reporting is the “star schema”.

The second acceptable option is the “snowflake schema”.

Make sure that developers adhere to these formats and that tables are not duplicated. In these formats, an optimal fact table always sits at the center, which keeps the system fast and flexible.
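To make the difference concrete, here is a minimal sketch using Python’s built-in `sqlite3` module, assuming a hypothetical sales fact table with a product dimension (all table and column names are illustrative). In the star variant the category lives directly in the product dimension; in the snowflake variant it is normalized into its own table, so queries need one more join.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Star schema: each dimension is a single, denormalized table.
CREATE TABLE dim_product_star (
    product_id INTEGER PRIMARY KEY,
    product_name TEXT,
    category_name TEXT              -- category stored directly in the dimension
);

-- Snowflake schema: the same dimension normalized into two tables.
CREATE TABLE dim_category (
    category_id INTEGER PRIMARY KEY,
    category_name TEXT
);
CREATE TABLE dim_product_snow (
    product_id INTEGER PRIMARY KEY,
    product_name TEXT,
    category_id INTEGER REFERENCES dim_category(category_id)
);

-- One fact table at the center, holding only keys and measures.
CREATE TABLE fact_sales (product_id INTEGER, amount REAL);

INSERT INTO dim_product_star VALUES (1, 'Laptop', 'Hardware'), (2, 'Antivirus', 'Software');
INSERT INTO dim_category VALUES (10, 'Hardware'), (20, 'Software');
INSERT INTO dim_product_snow VALUES (1, 'Laptop', 10), (2, 'Antivirus', 20);
INSERT INTO fact_sales VALUES (1, 1200.0), (1, 800.0), (2, 50.0);
""")

# Star: a single join from the fact table reaches the category.
star = conn.execute("""
    SELECT p.category_name, SUM(f.amount)
    FROM fact_sales AS f
    JOIN dim_product_star AS p ON f.product_id = p.product_id
    GROUP BY p.category_name ORDER BY p.category_name
""").fetchall()

# Snowflake: an extra join is needed to reach the category.
snowflake = conn.execute("""
    SELECT c.category_name, SUM(f.amount)
    FROM fact_sales AS f
    JOIN dim_product_snow AS p ON f.product_id = p.product_id
    JOIN dim_category AS c ON p.category_id = c.category_id
    GROUP BY c.category_name ORDER BY c.category_name
""").fetchall()

print(star)               # [('Hardware', 2000.0), ('Software', 50.0)]
print(star == snowflake)  # True
```

Both queries return the same totals; the star schema simply gets there with fewer joins, which is one reason it is usually preferred for reporting.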

The fact table needs only 13 universal columns:

- Entity_ID (varchar 16) – unique ID of an entity in any dimension.

- Property_Type_ID () – ID of the measure type (1, 2, 3, 4, …)

- Value – single entry point to the data

- Annotation – comment

- Status – data status (active, inactive, etc.)

- Source_Type – type of source

- Calculation_ID – ID of the calculation

- Value_Type – data type (int, char, datetime, etc.)

- Value_STR – measure for data of string type

- Value_NUM – measure for data of decimal type

- Value_DAT – measure for data of datetime type

- Key_Date – datetime dimension

- Period_Type_ID – timeframe dimension (hour, day, month, year, etc.)
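One way to express this 13-column layout in code is a Python dataclass. This is a sketch under stated assumptions: the `value_type` codes (`"str"`, `"num"`, `"dat"`) and the sample period code are hypothetical, and `Value` is modeled as a derived accessor that returns whichever typed column is filled, which is only one possible reading of “single entry point to data”.

```python
from dataclasses import dataclass
from datetime import datetime
from decimal import Decimal
from typing import Optional, Union


@dataclass
class FactRow:
    entity_id: str                          # Entity_ID, varchar(16)
    property_type_id: int                   # Property_Type_ID: which measure this row holds
    value_type: str                         # Value_Type: "str", "num" or "dat" (assumed codes)
    key_date: datetime                      # Key_Date: datetime dimension
    period_type_id: int                     # Period_Type_ID: hour, day, month, ...
    value_str: Optional[str] = None         # Value_STR
    value_num: Optional[Decimal] = None     # Value_NUM
    value_dat: Optional[datetime] = None    # Value_DAT
    annotation: Optional[str] = None        # Annotation
    status: str = "active"                  # Status
    source_type: Optional[str] = None       # Source_Type
    calculation_id: Optional[int] = None    # Calculation_ID

    def __post_init__(self):
        if len(self.entity_id) > 16:
            raise ValueError("Entity_ID is limited to 16 characters")

    @property
    def value(self) -> Union[str, Decimal, datetime, None]:
        """Value: returns whichever typed column Value_Type points to."""
        return {
            "str": self.value_str,
            "num": self.value_num,
            "dat": self.value_dat,
        }[self.value_type]


# Period code 3 standing for "month" is a hypothetical convention.
revenue = FactRow("STORE-0001", 1, "num", datetime(2017, 5, 1), 3, value_num=Decimal("1250.50"))
note = FactRow("STORE-0001", 2, "str", datetime(2017, 5, 1), 3, value_str="Promo week")
print(revenue.value)  # 1250.50
print(note.value)     # Promo week
```

Because every measure is a row rather than a column, adding a new measure means adding a new Property_Type_ID value, not altering the table.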

The other tables describe the analytical dimensions shown in the picture above.

IMPORTANT! The fact table stores only reference keys that link it to the tables containing descriptive information about the reference data (lookup tables).
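The following sketch, again using Python’s `sqlite3`, shows the fact table holding only keys and values while descriptive names live in lookup tables. The table names `entity` and `property_type` and the sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Lookup (reference) tables hold the descriptive text.
CREATE TABLE property_type (property_type_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE entity (entity_id VARCHAR(16) PRIMARY KEY, name TEXT);

-- The fact table stores only keys to the lookup tables, plus the value and date.
CREATE TABLE fact (
    entity_id        VARCHAR(16) REFERENCES entity(entity_id),
    property_type_id INTEGER REFERENCES property_type(property_type_id),
    value_num        REAL,
    key_date         TEXT
);

INSERT INTO property_type VALUES (1, 'Revenue'), (2, 'Units sold');
INSERT INTO entity VALUES ('STORE-0001', 'Store A'), ('STORE-0002', 'Store B');
INSERT INTO fact VALUES
    ('STORE-0001', 1, 1250.5, '2017-05-01'),
    ('STORE-0001', 2, 40,     '2017-05-01'),
    ('STORE-0002', 1, 980.0,  '2017-05-01');
""")

# Descriptions are resolved at query time by joining on the keys.
rows = conn.execute("""
    SELECT e.name, p.name, f.value_num
    FROM fact AS f
    JOIN entity AS e ON e.entity_id = f.entity_id
    JOIN property_type AS p ON p.property_type_id = f.property_type_id
    ORDER BY e.name, p.name
""").fetchall()

for row in rows:
    print(row)
```

Because descriptions live only in the lookup tables, renaming a measure or an entity is a one-row update that leaves the fact rows untouched.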

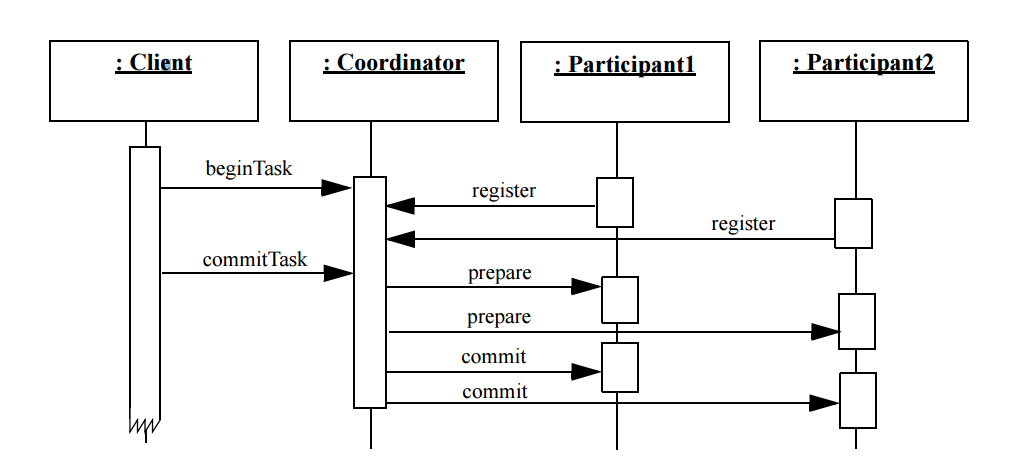

CONTROLLER – A controller is the link between a user and the system. It provides the user with input by arranging for relevant views to present themselves in appropriate places on the screen. It provides means for user output by presenting the user with menus or other means of giving commands and data. The controller receives such user output, translates it into the appropriate messages and passes these messages on to one or more of the views. In a report, the controller determines how the report’s elements work with each other and with the data model.

The best approach is to use the “Coordinator” or “Observer” design pattern.
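A minimal Observer sketch in Python: a hypothetical `FilterSubject` holds the report’s current filters and pushes the filtered rows to every attached report element (here a KPI tile and a product table) whenever a filter changes. The class names and sample data are illustrative assumptions, not part of any BI product’s API.

```python
# Hypothetical sales rows: (region, product, amount)
SALES = [
    ("North", "Laptop", 1200),
    ("North", "Antivirus", 50),
    ("South", "Laptop", 800),
]
COLUMNS = {"region": 0, "product": 1}


class FilterSubject:
    """Subject: owns the current filters and notifies every attached element."""

    def __init__(self, rows):
        self._rows = rows
        self._observers = []
        self.filters = {}

    def attach(self, observer):
        self._observers.append(observer)
        observer.update(self._filtered())  # give the new element its initial data

    def set_filter(self, column, value):
        self.filters[column] = value
        rows = self._filtered()
        for observer in self._observers:
            observer.update(rows)

    def _filtered(self):
        return [
            row for row in self._rows
            if all(row[COLUMNS[col]] == val for col, val in self.filters.items())
        ]


class KpiTile:
    """Observer: shows a single total."""

    def __init__(self):
        self.value = None

    def update(self, rows):
        self.value = sum(amount for _, _, amount in rows)


class ProductTable:
    """Observer: shows totals per product."""

    def __init__(self):
        self.lines = None

    def update(self, rows):
        totals = {}
        for _, product, amount in rows:
            totals[product] = totals.get(product, 0) + amount
        self.lines = sorted(totals.items())


subject = FilterSubject(SALES)
kpi, table = KpiTile(), ProductTable()
subject.attach(kpi)
subject.attach(table)
subject.set_filter("region", "North")  # both elements refresh together
print(kpi.value, table.lines)  # 1250 [('Antivirus', 50), ('Laptop', 1200)]
```

The report elements never call each other; they only react to the subject, so adding a new chart means attaching one more observer.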

Consider a scenario involving two participants in which the second participant fails in the prepare phase. The Coordinator then aborts the task, asking the first participant, which succeeded in the prepare phase, to revert any changes it might have made.
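The abort-and-revert behavior described here follows the classic two-phase commit shape. Below is a minimal Python sketch of it; `Participant`, `Coordinator` and the `fail_on_prepare` flag are hypothetical names, and a production implementation would also need timeouts and durable logging.

```python
class Participant:
    """A report component that can prepare, commit or roll back a change."""

    def __init__(self, name, fail_on_prepare=False):
        self.name = name
        self.fail_on_prepare = fail_on_prepare  # simulates a failing participant
        self.state = "idle"

    def prepare(self):
        if self.fail_on_prepare:
            self.state = "failed"
            return False
        self.state = "prepared"
        return True

    def commit(self):
        self.state = "committed"

    def rollback(self):
        self.state = "rolled back"


class Coordinator:
    def __init__(self, participants):
        self.participants = participants

    def run(self):
        prepared = []
        # Phase 1: ask every participant to prepare; stop at the first failure.
        for p in self.participants:
            if not p.prepare():
                # Abort: revert everyone who already succeeded in the prepare phase.
                for done in prepared:
                    done.rollback()
                return False
            prepared.append(p)
        # Phase 2: everyone prepared successfully, so commit everywhere.
        for p in prepared:
            p.commit()
        return True


first = Participant("refresh data extract")
second = Participant("rebuild dashboard cache", fail_on_prepare=True)
ok = Coordinator([first, second]).run()
print(ok, first.state, second.state)  # False rolled back failed
```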

VIEW – The analytical application should be intuitive, and working with it should take only a “few clicks”. To that end, I would like to introduce the DAR methodology.

The Dashboard, Analysis, Reporting (DAR) methodology is a foundation you can build all of your applications on while still having room to be creative and meet the varying requirements of individual clients / prospects.

In a nutshell you lead with a Dashboard page, followed by Analysis pages, and finish with Reporting pages. The Dashboard gives the high level overview of the business, the Analysis pages give interactive user-driven controls to filter the data, while the Reporting pages give the most granular details.
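To illustrate how the three DAR levels relate, here is a Python sketch that serves the same hypothetical sales facts at three levels of granularity: a single KPI for the Dashboard, filtered and grouped figures for Analysis, and raw rows for Reporting. The function names and data are illustrative assumptions.

```python
from collections import defaultdict

# Hypothetical sales facts: (region, product, month, amount)
FACTS = [
    ("North", "Laptop", "2017-04", 1200),
    ("North", "Antivirus", "2017-04", 50),
    ("South", "Laptop", "2017-04", 800),
    ("South", "Laptop", "2017-05", 900),
]
FIELDS = ("region", "product", "month")


def dashboard(facts):
    """Dashboard: one high-level KPI for the whole business."""
    return sum(amount for *_, amount in facts)


def analysis(facts, **filters):
    """Analysis: user-driven filters, results grouped by region."""
    by_region = defaultdict(int)
    for row in facts:
        record = dict(zip(FIELDS, row[:3]))
        if all(record[k] == v for k, v in filters.items()):
            by_region[record["region"]] += row[3]
    return dict(by_region)


def reporting(facts, region):
    """Reporting: the most granular rows behind a selected figure."""
    return [row for row in facts if row[0] == region]


print(dashboard(FACTS))                   # 2950
print(analysis(FACTS, product="Laptop"))  # {'North': 1200, 'South': 1700}
print(reporting(FACTS, "South"))
```

All three levels read the same facts, so a figure on the dashboard can always be traced down to the report rows that produce it.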

CONCLUSION

Gartner does a wonderful job every year for your benefit. Focus on the leaders if you want to be a leader. Choose the tools that scored the most points according to user feedback; in our case, that is the Leaders quadrant. Statistics do not lie.

Decompose your goal and, to achieve the best result, follow the approaches outlined above. Spend a little time (just 15 minutes) monitoring the development process, the atmosphere in the team and the team members, and you will be surprised by the difference in the results.

Good luck.
